An ideal translated video has accurate, appropriately timed subtitles, a dubbing voice that matches the original, and perfect synchronization between subtitles, audio, and video.

This guide will walk you through the four key steps of video translation, providing best practice recommendations for each stage.

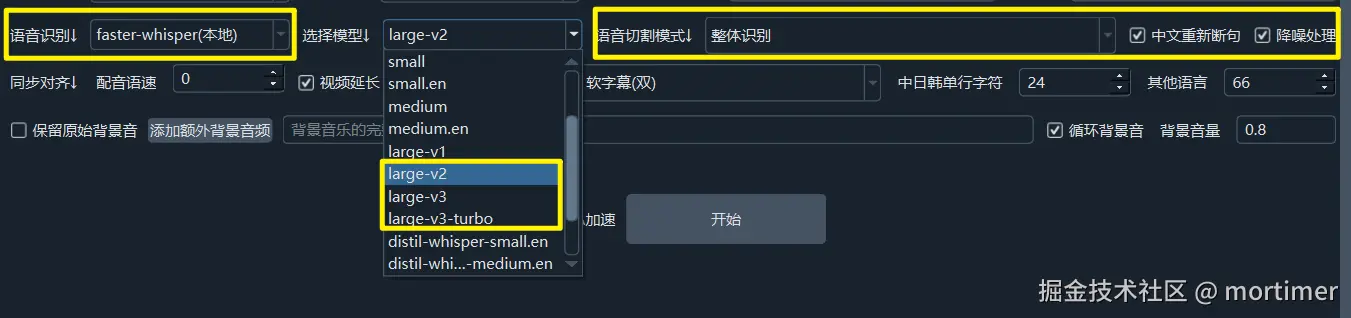

Step 1: Speech Recognition

Goal: Transcribe the audio from the video into a subtitle file in the original language.
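To make the goal concrete, the sketch below shows what a subtitle file looks like when built from recognized speech segments. It assumes the subtitle file is in SRT format and that each segment is a `(start_seconds, end_seconds, text)` tuple. Both are assumptions for illustration, and the function names are hypothetical rather than part of the application.

```python
def format_timestamp(seconds: float) -> str:
    """Render a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments):
    """Build SRT text from (start_seconds, end_seconds, text) tuples.

    The tuple layout is an assumption made for this sketch.
    """
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{format_timestamp(start)} --> {format_timestamp(end)}\n"
            f"{text.strip()}\n"
        )
    # A blank line separates consecutive subtitle entries.
    return "\n".join(blocks)


if __name__ == "__main__":
    print(segments_to_srt([(0.0, 1.5, "Hello"), (1.5, 3.0, "World")]))
```

Each entry carries its own start and end time, which is why accurate segmentation at this step matters for every later step.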

Corresponding Control Element: "Speech Recognition" row

Best Configuration (Non-Chinese):

- Select `faster-whisper(local)`
- Choose model `large-v2`, `large-v3`, or `large-v3-turbo`
- Speech Segmentation Mode: `Overall Recognition`
- Check `Preserve Original Background Sound` (more time-consuming)

Best Configuration (Chinese):

- Select `Ali FunASR`
- Speech Segmentation Mode: `Overall Recognition`
- Check `Preserve Original Background Sound` (more time-consuming)

Best Configuration (Less Common Languages):

- Select `Gemini Large Model Recognition`

Note: Processing speed will be extremely slow without an NVIDIA graphics card or if CUDA acceleration is not enabled. Insufficient video memory can lead to crashes.
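Before starting a long recognition job, it can help to confirm that an NVIDIA GPU is visible and how much video memory it has. The following is a minimal sketch that assumes the `nvidia-smi` tool shipped with the NVIDIA driver is on the PATH. The function name is hypothetical, and a visible GPU does not by itself prove that CUDA acceleration is enabled inside the application.

```python
import shutil
import subprocess


def nvidia_gpu_info() -> list[str]:
    """Return one "name, total memory" line per GPU, or [] if none is found."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # No NVIDIA driver tooling on PATH: treat as "no usable GPU".
        return []
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=10,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        # The tool exists but failed (driver problem, timeout, and so on).
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = nvidia_gpu_info()
    if gpus:
        for gpu in gpus:
            print("Found GPU:", gpu)
    else:
        print("No NVIDIA GPU detected; recognition will likely be very slow.")
```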

Step 2: Subtitle Translation

Goal: Translate the subtitle file generated in the first step into the target language.

Corresponding Control Element: "Translation Channel" row

Best Configuration:

- Preferred: If you have a VPN and understand how to configure it, use the `gemini-2.5-flash` model (Gemini AI Channel) in Menu - Translation Settings - Gemini Pro.
- Second Best: If you don't have a VPN or don't know how to configure a proxy, select `DeepSeek` in "Translation Channel."

Gemini AI usage instructions: https://pyvideotrans.com/gemini.html

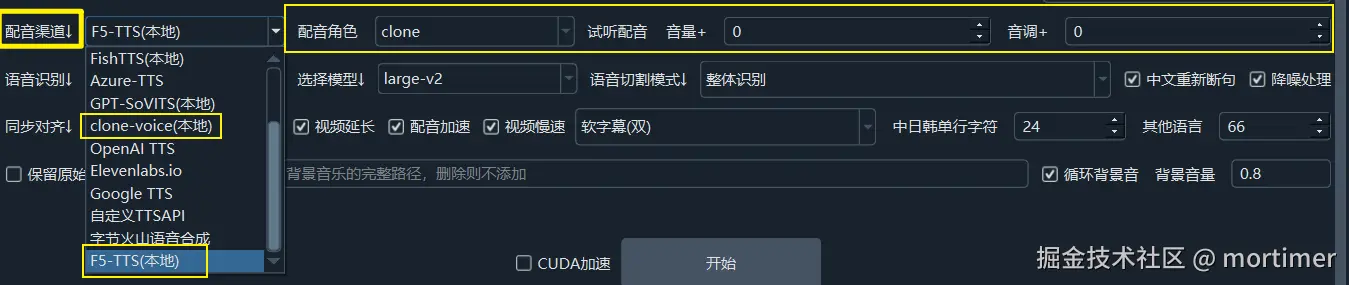

Step 3: Voice Dubbing

Goal: Generate dubbing based on the translated subtitle file.

Corresponding Control Element: "Dubbing Channel" row

Best Configuration:

- Edge-TTS: Free and supports all languages

- Chinese or English: `F5-TTS`/`Index-TTS` (local)
- Japanese/Korean: `CosyVoice` (local)

You need to install the corresponding `F5-TTS/CosyVoice/clone-voice` integration package. See the documentation: https://pyvideotrans.com/f5tts.html

Step 4: Subtitle, Dubbing, and Video Synchronization

- Goal: Synchronize the subtitles, dubbing, and video.
- Corresponding Control Element: `Synchronization` row
- Best Configuration:
  - When translating from Chinese to English, you can adjust the `Dubbing Speed` value (e.g., `10` or `15`) to speed up the dubbing because English sentences are typically longer.
  - Select the `Dubbing Acceleration` and `Video Slowdown` options to force alignment of subtitles, audio, and video.
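To get a feel for what a dubbing speed value such as 10 or 15 means, the sketch below estimates how much faster a dubbed clip must play to fit inside its subtitle time slot. It assumes the value is a percentage speed-up, which this guide does not state explicitly, and the function name is hypothetical.

```python
import math


def required_speedup_percent(dub_seconds: float, slot_seconds: float) -> int:
    """Percent speed-up needed so a dubbed clip fits its subtitle slot.

    Assumes a value of 10 means "play 10% faster" (an assumption).
    """
    if dub_seconds <= 0 or slot_seconds <= 0:
        raise ValueError("durations must be positive")
    ratio = dub_seconds / slot_seconds
    if ratio <= 1.0:
        # The dubbing already fits; no speed-up is needed.
        return 0
    # Round up so the sped-up clip never overruns the slot.
    return math.ceil((ratio - 1.0) * 100)


if __name__ == "__main__":
    # A 4.5 s English dub for a subtitle that is on screen for 4.0 s.
    print(required_speedup_percent(4.5, 4.0))
```

If most clips need only a small speed-up, a modest global value may be enough. Clips that need far more are the cases where forcing alignment by also slowing the video becomes useful.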

Output Video Quality Control

- The default output uses lossy compression. For lossless output, set `Video Transcoding Loss Control` to 0 in Menu - Tools - Advanced Options - Video Output Control Area.

- Note: If the original video is not in MP4 format or uses embedded hard subtitles, video encoding conversion will cause some loss, but the loss is usually negligible. Improving video quality will significantly reduce processing speed and increase the output video size.
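For readers who re-encode video outside the application, the trade-off above can be illustrated with ffmpeg's constant rate factor (CRF) for the `libx264` encoder, where 0 is lossless and higher values compress more. Whether `Video Transcoding Loss Control` maps directly to CRF is an assumption here, and the helper name is hypothetical. The sketch only builds the command and does not run it.

```python
def build_transcode_command(src: str, dst: str, crf: int = 0) -> list[str]:
    """Build an ffmpeg command that re-encodes video with libx264 at a given CRF.

    crf=0 is lossless for 8-bit x264; larger values trade quality for size.
    """
    if not 0 <= crf <= 51:
        raise ValueError("crf must be between 0 and 51 for 8-bit x264")
    return [
        "ffmpeg",
        "-y",              # overwrite the output file if it exists
        "-i", src,         # input video
        "-c:v", "libx264",
        "-crf", str(crf),
        "-c:a", "copy",    # keep the audio stream unchanged
        dst,
    ]


if __name__ == "__main__":
    print(" ".join(build_transcode_command("input.mp4", "output.mp4", crf=0)))
```

Lower CRF values produce larger files and take longer to encode, which matches the speed and size cost described in the note.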