Google Colab is a free cloud-based programming environment. You can think of it as a computer in the cloud that can run code, process data, and even perform complex AI calculations, such as quickly and accurately converting your audio and video files into subtitles using large models.

This article will guide you step-by-step on how to use pyVideoTrans on Colab to transcribe audio and video into subtitles. Even if you don't have any programming experience, that's okay. We'll provide a pre-configured Colab notebook, and you just need to click a few buttons to complete it.

Prerequisites: Internet Access and a Google Account

Before you start, you'll need two things:

- Internet Access: Stable internet access is required to access Google Colab and download necessary files.

- Google Account: You'll need a Google account to use Colab. Registration is completely free. With a Google account, you can log in to Colab and use its services.

Make sure you can open Google: https://google.com

Open the Colab Notebook

After ensuring that you can access the Google website and log in to your Google account, click the following link to open the Colab notebook we have prepared for you:

https://colab.research.google.com/drive/1kPTeAMz3LnWRnGmabcz4AWW42hiehmfm?usp=sharing

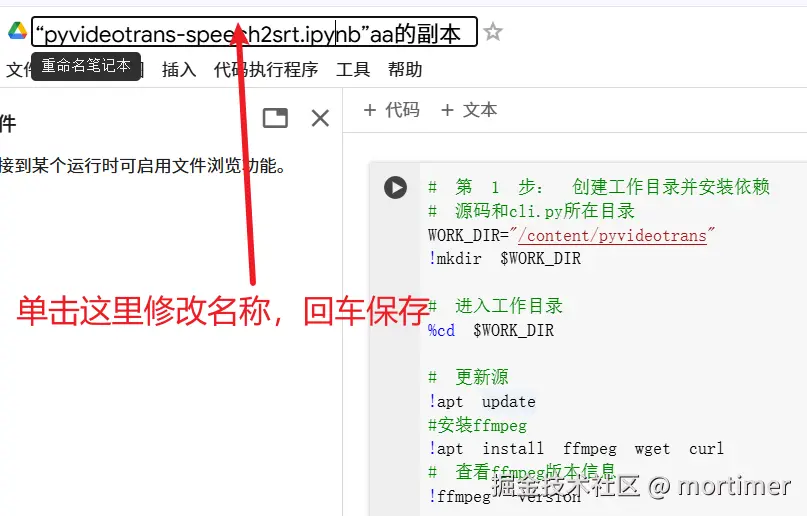

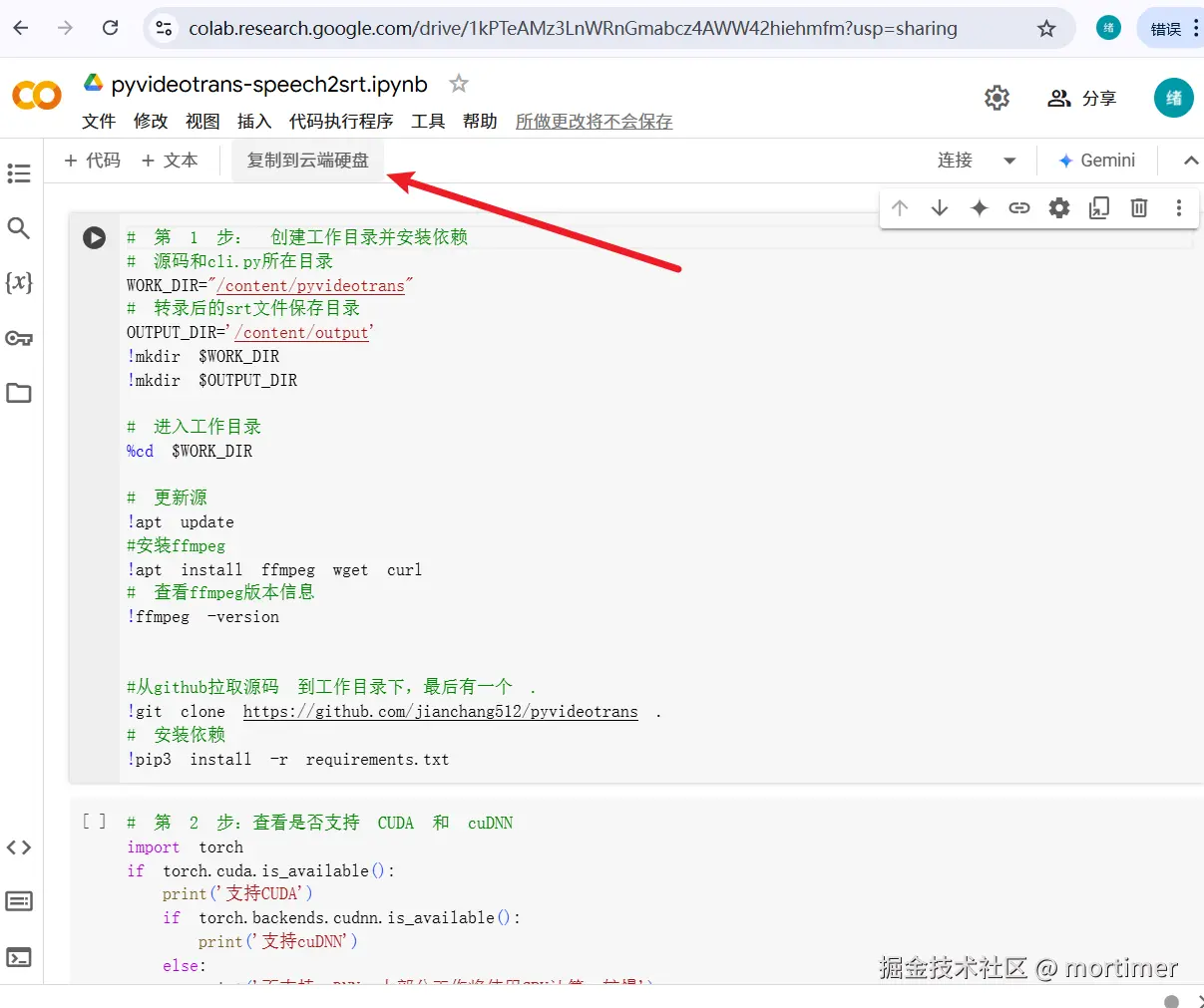

You will see an interface similar to the image below. Since this is a shared notebook, you need to copy it to your own Google Drive before you can modify and run it. Click "Copy to Drive" in the upper left corner, and Colab will automatically create a copy for you and open it.

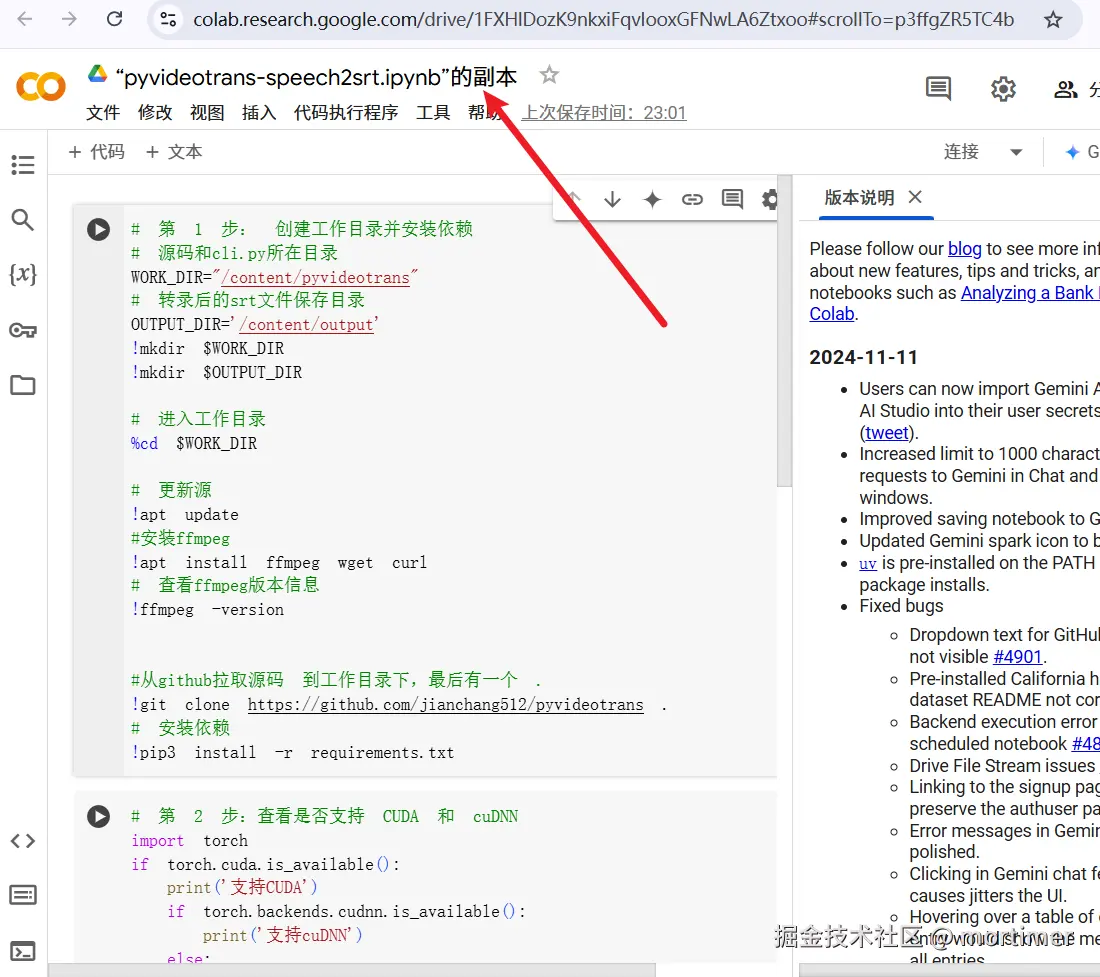

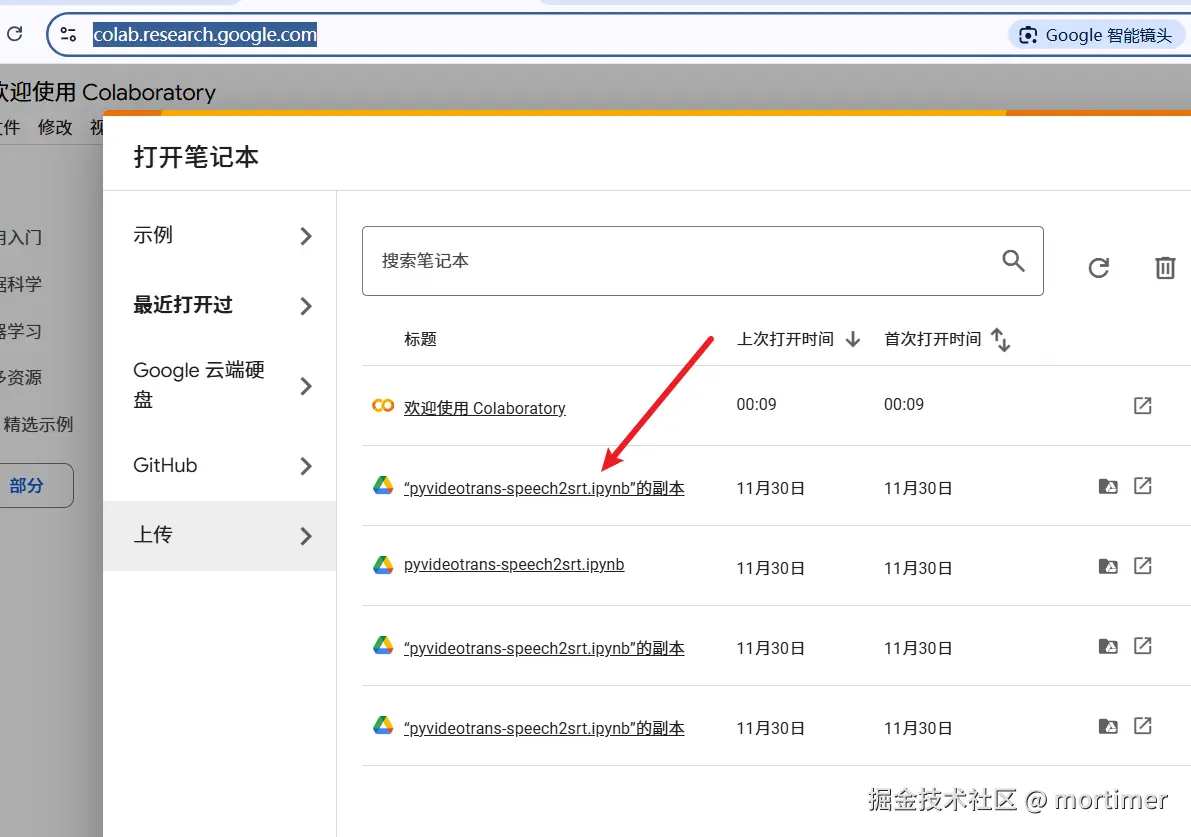

The following image shows the created page:

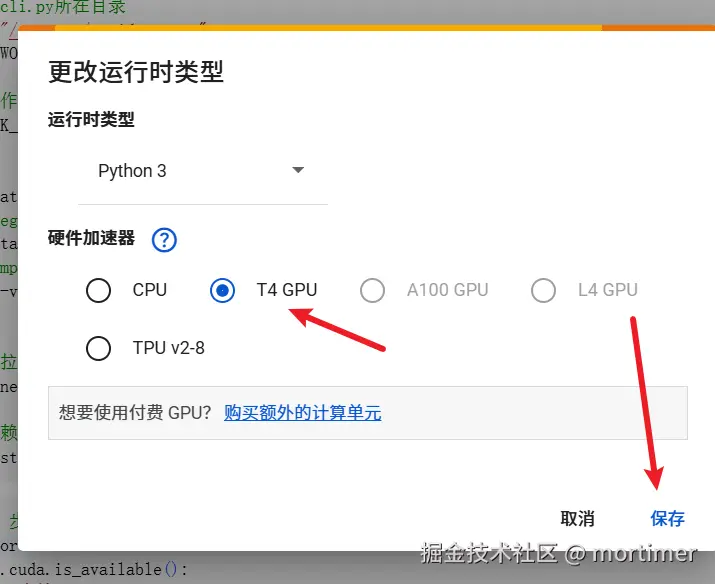

Connect to GPU/TPU

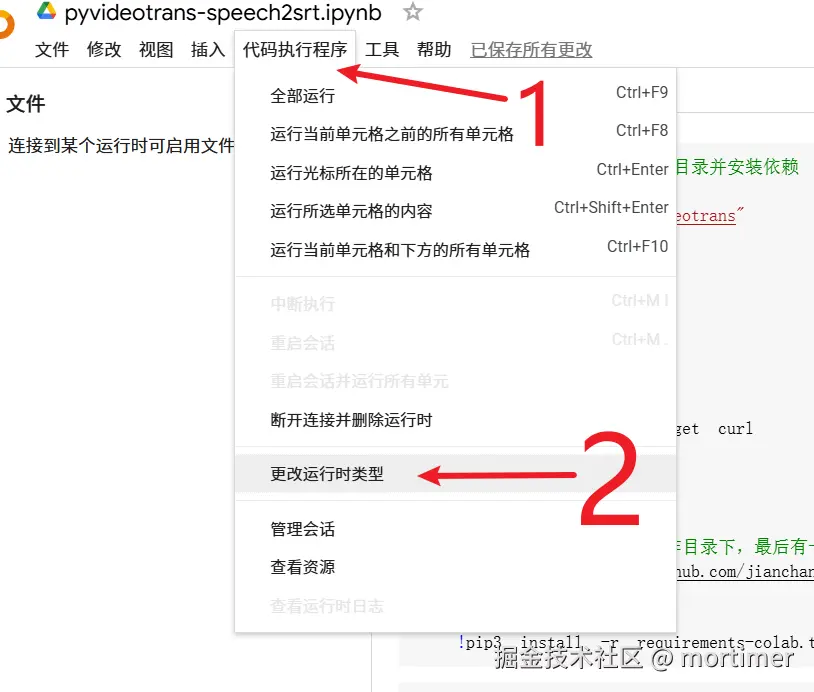

Colab defaults to using the CPU to run code. To speed up transcription, we need to use a GPU or TPU.

Click "Runtime" -> "Change runtime type" in the menu bar, select "GPU" or "TPU" in the "Hardware accelerator" drop-down menu, and then click "Save". If a dialog box pops up, select "Allow" or "Agree".

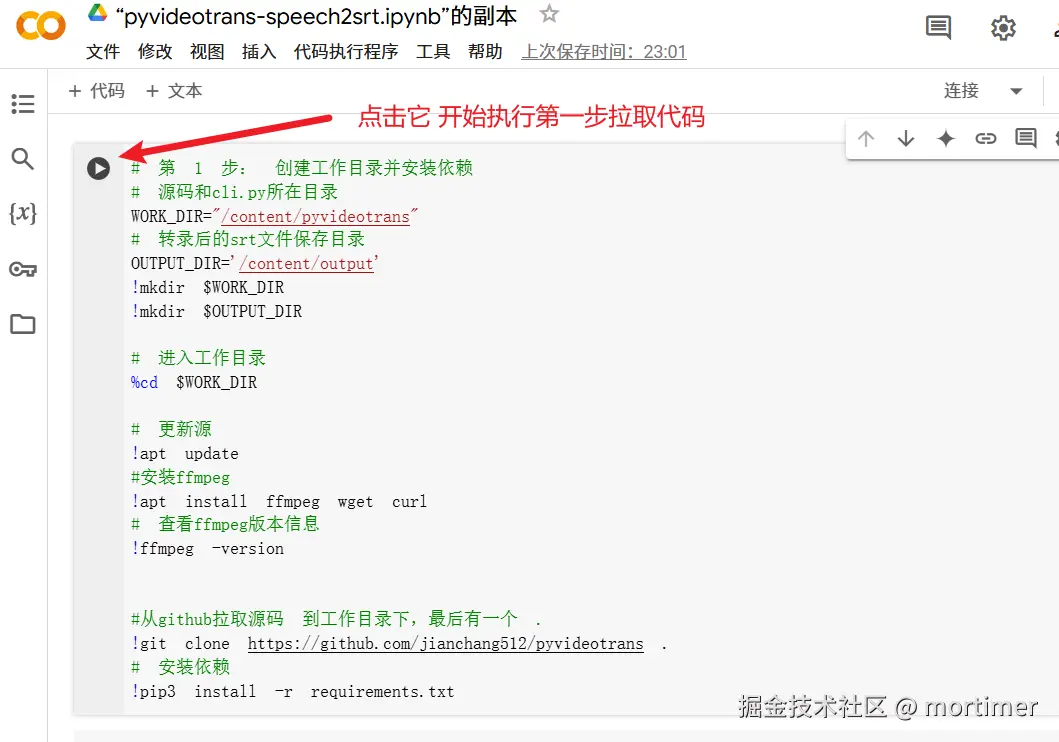

Using the notebook is simple and takes three steps.

1. Fetch the Source Code and Install the Environment

Find the first code block (the gray area with a play button) and click the play button to execute the code. This code will automatically download and install pyvideotrans and other software it needs.

Wait for the code to finish executing, and you will see the play button turn into a check mark. Red error messages may appear during the process, which can be ignored.

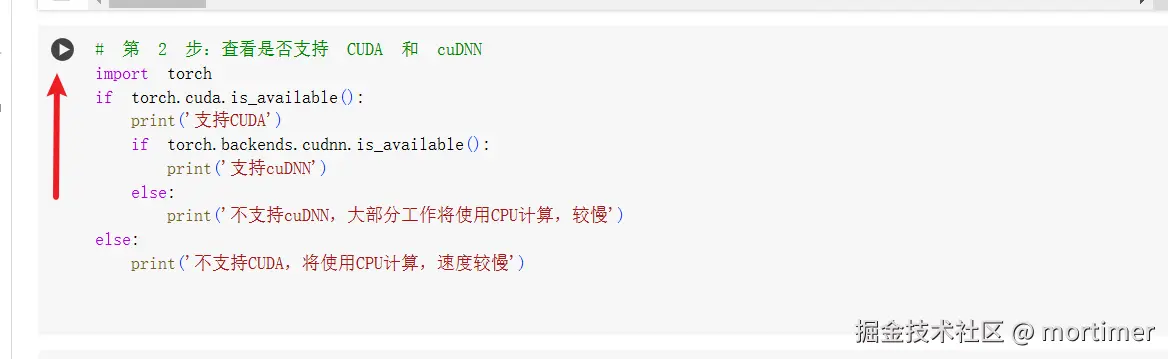

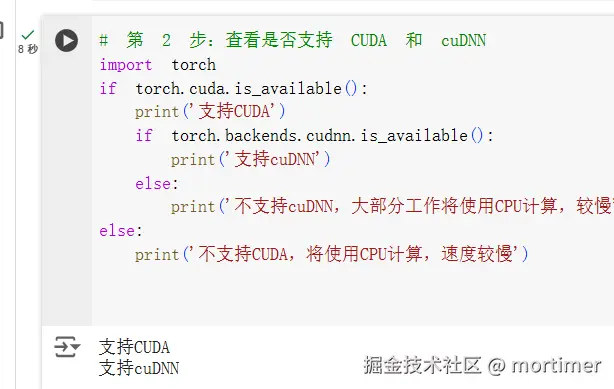

2. Check if GPU/TPU is Available

Run the second code block to confirm whether the GPU/TPU is successfully connected. If the output shows CUDA support, it means the connection is successful. If the connection is not successful, please go back and double-check whether you are connected to the GPU/TPU.
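If you want an extra check besides the notebook's own second code block, the following is a minimal sketch that you could paste into a new code cell. It is not the code from the prepared notebook. It assumes a GPU runtime that ships the standard NVIDIA tool nvidia-smi, and the function name gpu_visible is made up for illustration. A TPU runtime would not be detected this way.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if nvidia-smi exists and lists at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # Tool not installed: most likely a CPU-only (or TPU) runtime.
        return False
    try:
        # "nvidia-smi -L" prints one line per GPU, e.g. "GPU 0: ...".
        result = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU visible:", gpu_visible())
```

If this prints False, go back to "Runtime" -> "Change runtime type" and check the hardware accelerator setting.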

3. Upload Audio and Video and Perform Transcription

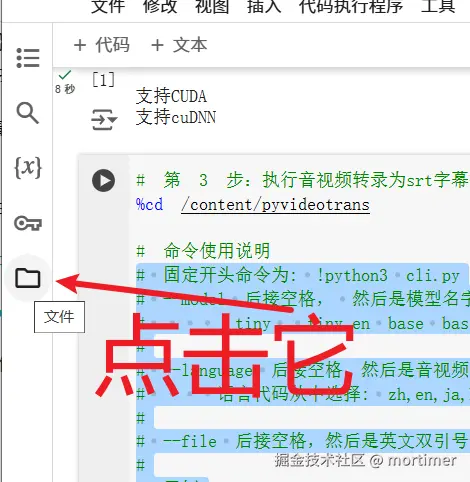

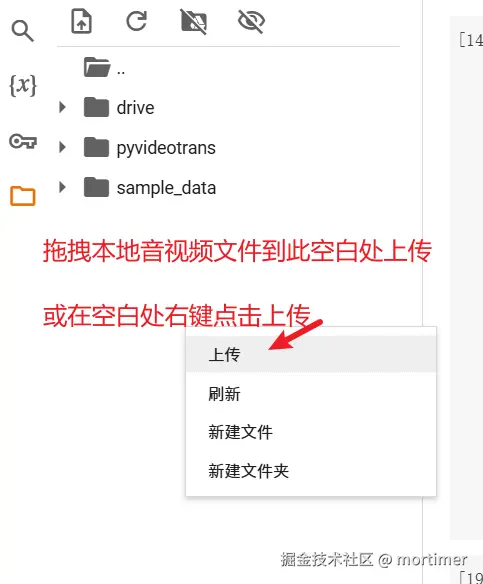

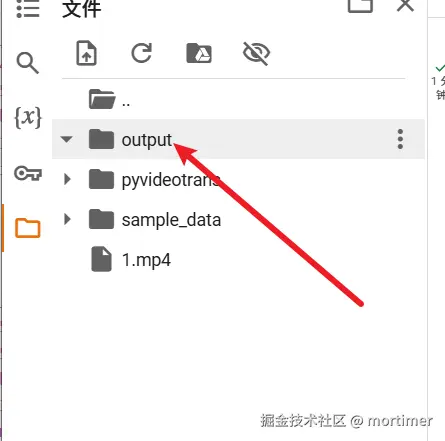

- Upload File: Click the file icon on the left side of the Colab interface to open the file explorer.

You can directly drag and drop your audio and video files from your computer to the blank space of the file explorer to upload.

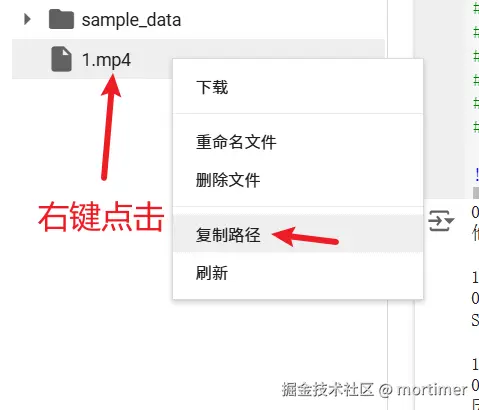

- Copy File Path: After the upload is complete, right-click on the file name and select "Copy path" to get the complete file path (for example: /content/your_file_name.mp4).

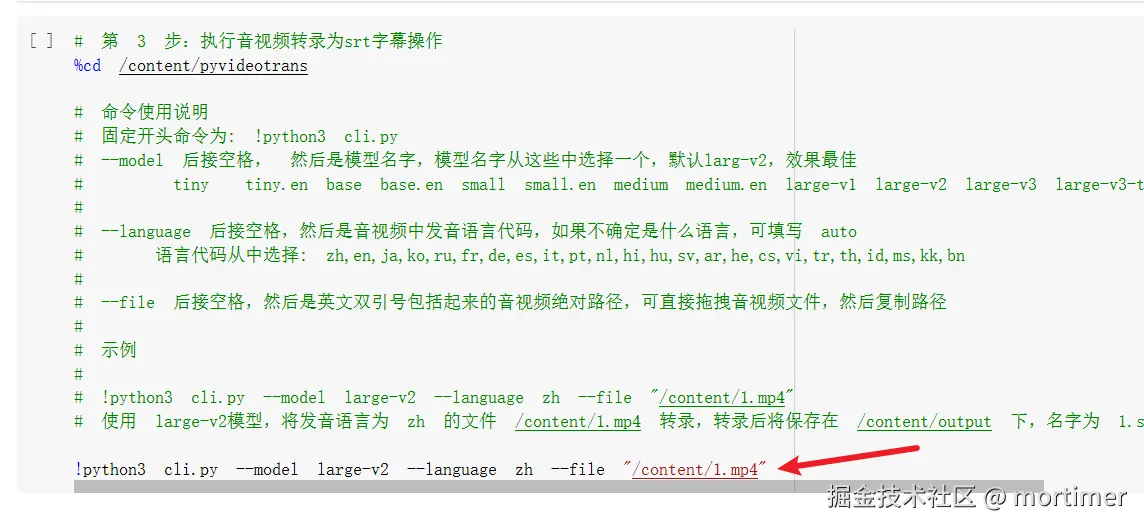

- Execute Command

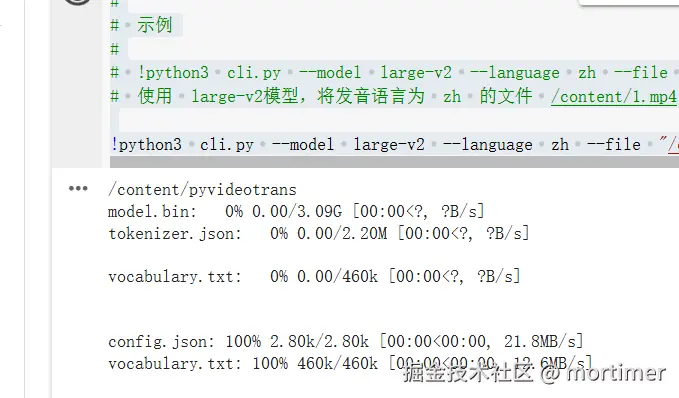

Take the following command as an example:

!python3 cli.py --model large-v2 --language zh --file "/content/1.mp4"

!python3 cli.py is the fixed start of the command. The leading exclamation mark is required and must not be left out.

cli.py can be followed by control parameters, such as which model to use, what language the audio or video is in, whether to use GPU or CPU, and where to find the file to be transcribed. Only the audio or video file path is required; the rest can be omitted, in which case the default values are used.

Suppose your video is named 1.mp4. After uploading it, copy its path and enclose it in straight (ASCII) double quotes to prevent errors caused by spaces in the name.

!python3 cli.py --file "Paste the copied path here"

After pasting and replacing, it becomes:

!python3 cli.py --file "/content/1.mp4"

Then click the execute button and wait for it to finish. The required model will be loaded automatically, and the download speed is very fast.
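To avoid quoting mistakes, the command line can also be assembled programmatically. The sketch below is an illustration, not part of pyVideoTrans. It uses only the --model, --language and --file options shown in this article, and the helper name build_command and its must_exist switch are hypothetical.

```python
import shlex
from pathlib import Path


def build_command(file_path, model=None, language=None, must_exist=True):
    """Build the notebook command for one file, quoting the path safely."""
    if must_exist and not Path(file_path).is_file():
        # Catch a wrong or stale path before starting a long run.
        raise FileNotFoundError(f"No such file: {file_path}")
    args = ["python3", "cli.py"]
    if model:
        args += ["--model", model]
    if language:
        args += ["--language", language]
    args += ["--file", str(file_path)]
    # shlex.join quotes any argument that contains spaces.
    return "!" + shlex.join(args)


# must_exist=False only so the example also runs outside Colab.
print(build_command("/content/my video.mp4", model="large-v3",
                    language="zh", must_exist=False))
```

The printed line can be pasted into a code cell as is. shlex uses single quotes rather than double quotes; the shell treats both as protecting the spaces in the file name.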

The default model is large-v2. If you want to change it to the large-v3 model, execute the following command:

!python3 cli.py --model large-v3 --file "Paste the copied path"

If you also want to set the language to Chinese, execute the following command:

!python3 cli.py --model large-v3 --language zh --file "Paste the copied path"

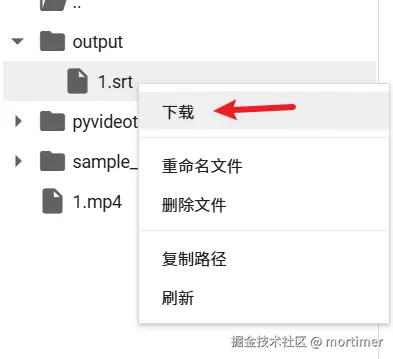

Where to Find the Transcription Results

After the execution starts, an output folder appears in the folder list on the left. All transcription results are saved there, each named after the original audio or video file.

Click on the output name to view all the files within it. Right-click on a file and click "Download" to download it to your local computer.
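If there are many result files, downloading them one by one becomes tedious. As a rough sketch, the output folder could be packed into a single zip archive first. The helper name zip_results and the default archive location /content/transcripts are assumptions made for this example; the real path of the output folder should be copied from the file explorer with "Copy path".

```python
import shutil
from pathlib import Path


def zip_results(output_dir, archive_base="/content/transcripts"):
    """Pack every file under output_dir into <archive_base>.zip."""
    out = Path(output_dir)
    if not out.is_dir():
        raise NotADirectoryError(f"Not a folder: {out}")
    # make_archive appends ".zip" and returns the archive's full path.
    # Keep archive_base outside output_dir so the zip does not include itself.
    return shutil.make_archive(str(archive_base), "zip", root_dir=str(out))


# Usage (replace the placeholder with the path you copied):
# archive = zip_results("<copied path of the output folder>")
# print(archive)
```

The resulting zip file shows up in the file explorer and can be downloaded with a right-click like any other file.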

Precautions

- Stable Internet is important.

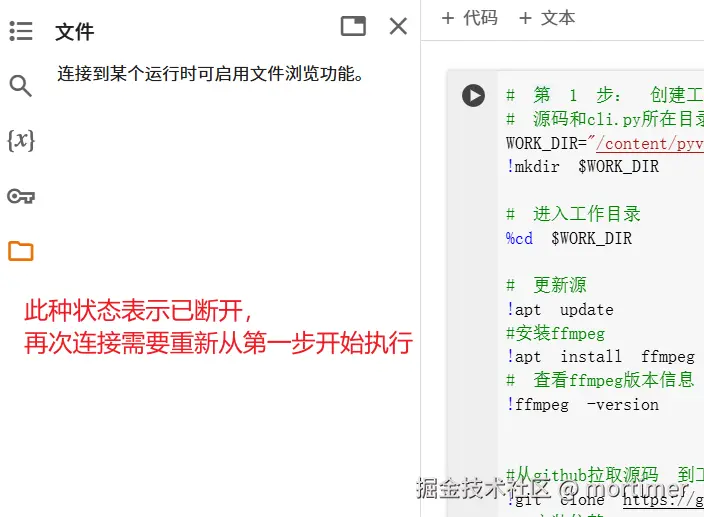

- The uploaded files and generated SRT files are stored in Colab only temporarily. When the connection drops or your free Colab usage time runs out, these files are deleted automatically, along with the downloaded source code and all installed dependencies. Download the generated results promptly.

- When you open Colab again, or when the connection is disconnected and reconnected, you need to start from the first step again.

- If you close the browser, where can you find it next time?

Open this address: https://colab.research.google.com/

Click on the name used last time.

- As shown in the figure above, if the name is hard to remember, how do you change it?